As AI-generated content floods our digital landscape, it's helpful to distinguish between two curious subcategories of flawed output: AI fails and AI slops.

Both refer to subpar results, but they differ in origin, intent, and the reactions they provoke. This blog post explores the differences between them, why they matter, and what they reveal about our evolving relationship with AI-generated media.

AI Fails

AI fails are unintentional errors that usually stem from limitations in an AI's training data or architecture. These failures can be surprisingly revealing. They often showcase the machine's lack of contextual understanding, making it possible to glimpse the scaffolding behind the illusion of intelligence.

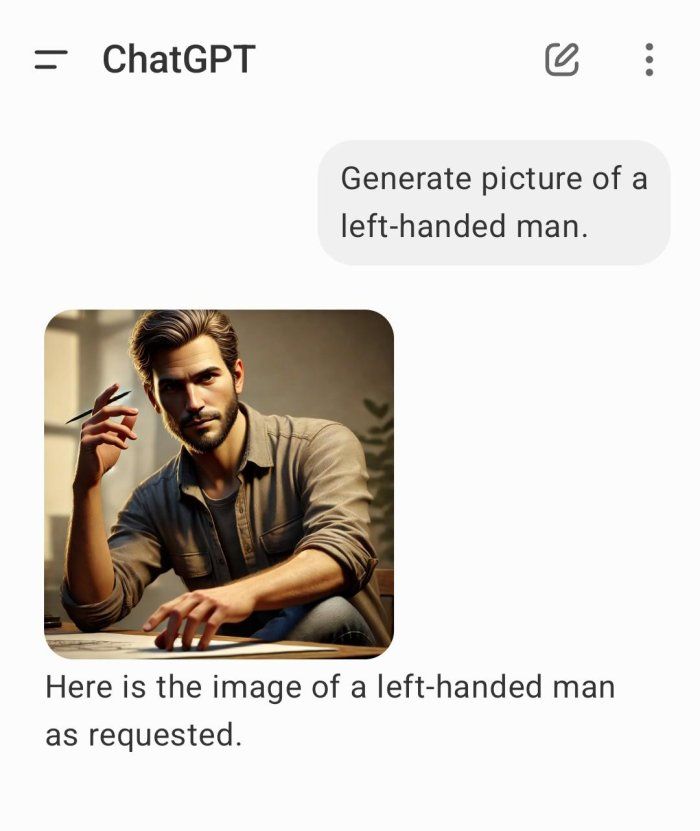

Take, for example, this image: the prompt asked for a left-handed man, yet the AI model stubbornly generated a man holding a pen in his right hand.

Another example is this AI-generated map of Europe. At first glance, it looks familiar, but then you notice the errors: country names that don't exist, misplaced borders, and an Eastern Europe that melts into fantasy geography. These fails are not just funny; they remind us that AI does not "know" geography. It merely mimics the structure of things it has seen before.

When AI Can’t Play Along

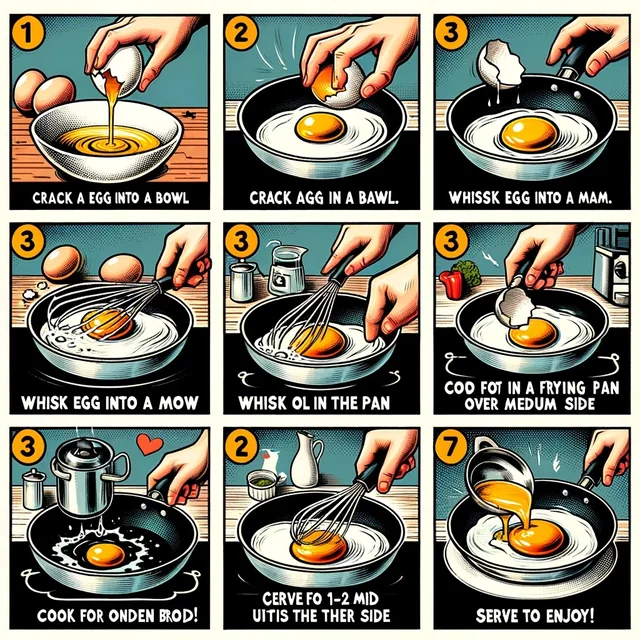

“Where’s Waldo?” is a classic example of a human task that requires both subtlety and restraint: the whole point is a single Waldo hidden in a crowd. But when you ask an image generator to recreate it, it goes wildly off-track. Waldo appears not once but repeatedly, flooding the scene in absurd, fractal fashion. The AI doesn’t understand the premise; it just reproduces patterns.

Similarly, step-by-step guides, such as instructions for cooking an egg, quickly devolve into floating hands, out-of-sequence numbers, and surreal object placement. The chaos tells us something valuable: these models lack narrative logic, especially when multiple steps must stay coherent over time.

AI Slops: The Rise of Spammy Engagement Bait

While AI fails expose technical limitations, AI slops are something else entirely: mass-produced, emotionally manipulative content, often designed to flood social media and harvest engagement. These slops are typically low-effort and eerily generic. One viral example is a "269-year-old grandma" beaming in front of a birthday cake. Her face shows a degree of age and joy that seems strangely off, but it works. The image tugs at heartstrings and garners thousands of likes and shares. Even when viewers suspect it's fake, many play along.

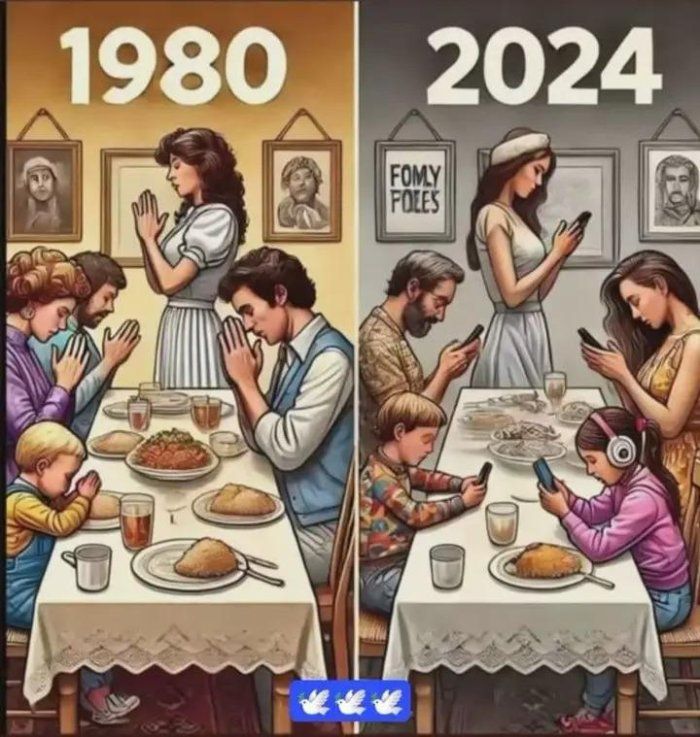

Another now-iconic example of AI slop is the “Family Prayer at the Dinner Table” comparison meme. On the left: a supposedly wholesome scene from “1980,” showing a family with bowed heads praying before dinner. On the right: the “2024” version, with everyone at the table staring at smartphones and one girl even wearing headphones. The emotional message is clear: modern life is disconnected and spiritually bankrupt. But look more closely: the food on the plates is strangely blurred, the painting on the wall reads “FOMLY FOLES,” and the hands don’t quite touch. The image combines the manipulative appeal of slop with subtle AI-fail artifacts. It’s a perfect example of how these categories can overlap, and of how effectively such images still spread on social media.

Why It Matters

AI fails invite reflection: they reveal what AI can’t do and open a window onto the machine’s non-human logic. They’re often amusing, but also intellectually valuable. Slops, by contrast, raise concerns about quality, authenticity, and manipulation in our digital environment. Both categories are worth documenting, not only because they're fascinating cultural phenomena, but also because they teach us how to live with, question, and understand artificial intelligence in everyday life.

Next time you stumble upon a suspiciously old birthday girl or a guide to boiling eggs that looks more like alchemy, pause for a moment. You’re not just looking at bad content. You’re witnessing the strange and sometimes unsettling edges of AI creativity.