It's clear that generative AI models continue to be both an invaluable tool and an unpredictable source of entertainment (and frustration). In summer 2025, four persistent trends in AI fails and slop have caught our attention; they reveal not only the limits of current technology but also some recurring patterns that merit a closer look.

1. Visual Guides to Critical Tasks

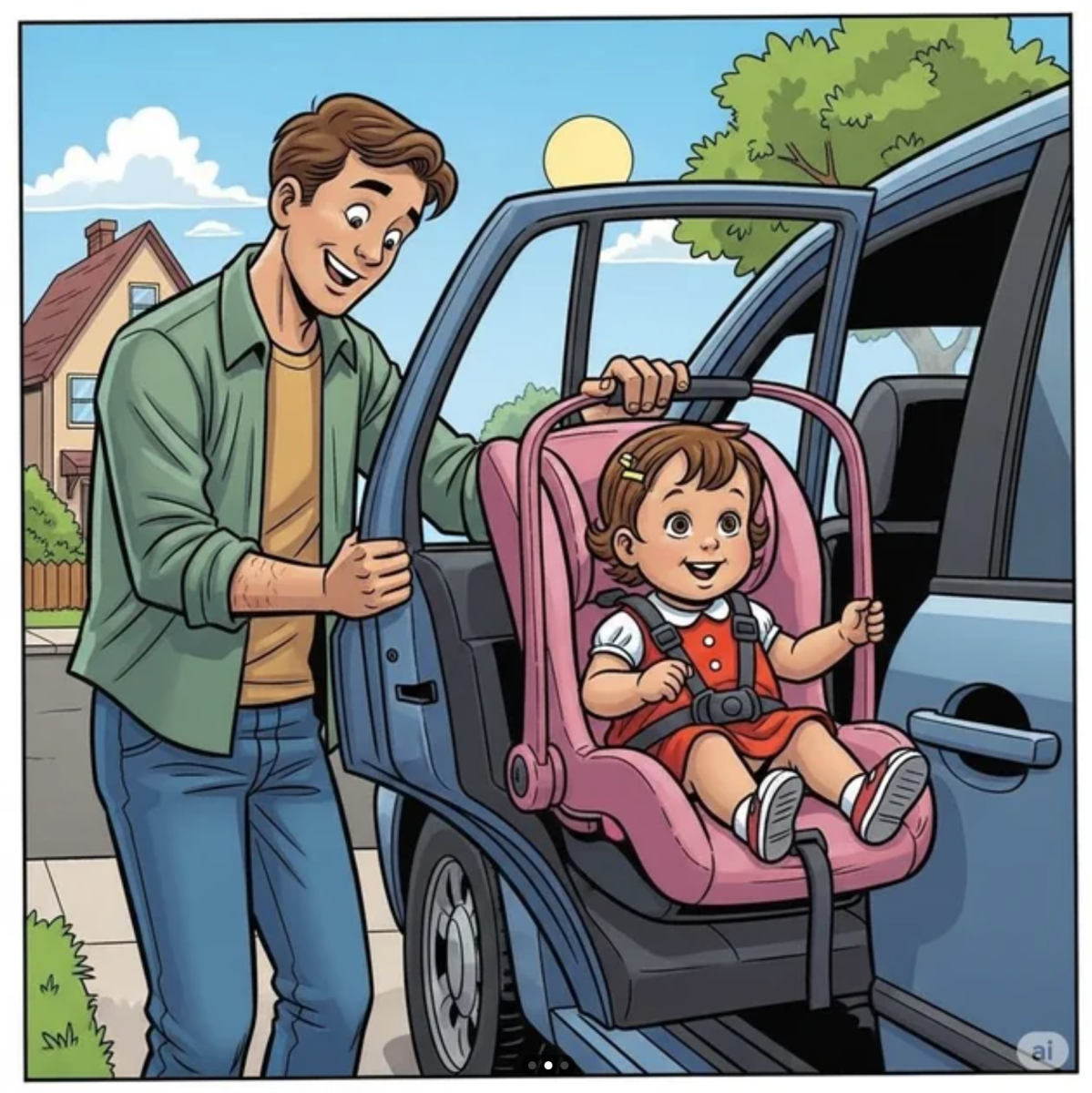

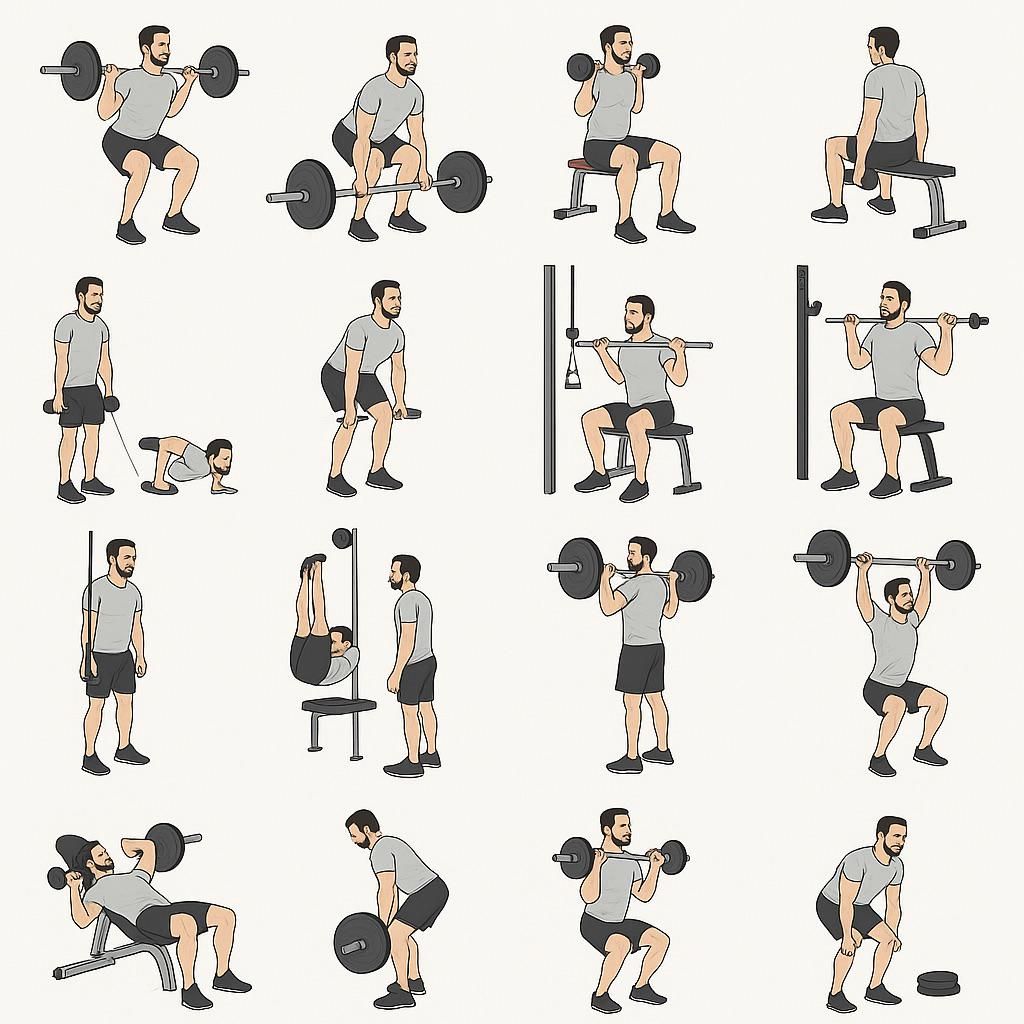

One of the more bizarre trends this summer has been AI-generated image guides for essential activities, such as "how to put a child safely in a car", or "how to do various fitness exercises". While the intent is noble, the results are often less so. Many of these images display impossible body positions, anatomically incorrect postures, or suggest unsafe practices. It seems that as of 2025, AI struggles with physical plausibility when translating textual instructions into visual form.

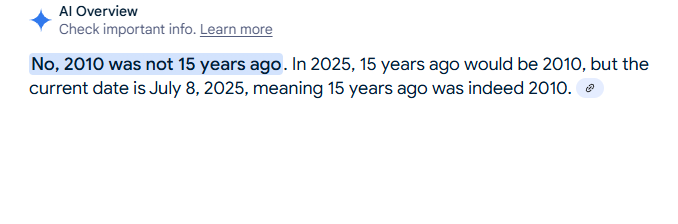

2. Screenshots of Google Query Results

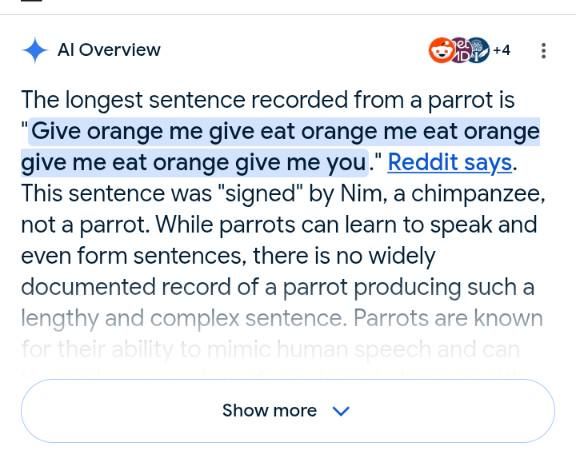

Also circulating are screenshots of the Gemini-based "AI Overview" responses that appear when one uses Google Search. For instance, asking "What rhymes with once?" yields responses like "never" or "when"; in a poetic sense, perhaps, they are rhymes, but that doesn't make the response technically accurate. Queries like "longest sentence by a parrot" result in Google AI summaries referencing a chimpanzee (rather than a parrot) using sign language (rather than verbal utterance). Other responses claim that "2010 was not 15 years ago", only to then do the math and confirm that 15 years ago was indeed 2010.
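The arithmetic behind the 2010 example is a one-line subtraction, which is what makes the contradiction so striking. Here is a minimal sketch in Python; the helper name `years_ago` and the reference date of 1 July 2025 are assumptions chosen for illustration, not anything taken from the screenshots.

```python
from datetime import date


def years_ago(year: int, today: date) -> int:
    """Return how many calendar years lie between `year` and `today`."""
    return today.year - year


# Assumed reference date: any day in summer 2025 gives the same result.
reference_day = date(2025, 7, 1)

print(years_ago(2010, reference_day))  # 15
```

Whatever the first sentence of an answer claims, the subtraction itself leaves no room for doubt.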

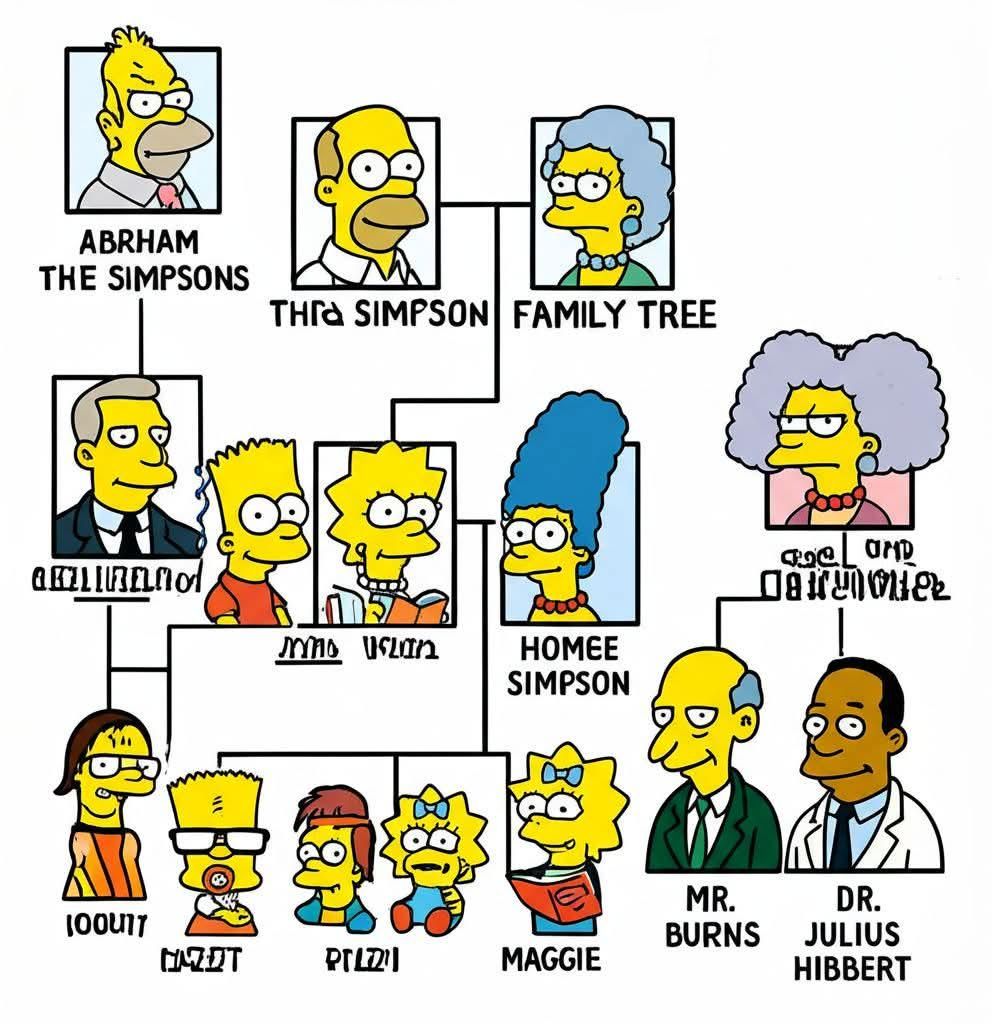

3. Genealogy Generation Gone Wrong

Attempting to generate family trees, like "The Simpsons Family Tree", has likewise become a favorite pastime for those who test the limits of AI-based image generators. Unfortunately, these efforts often produce bizarre results, such as Mr. Burns appearing as Maggie's nephew, Marge labeled "HOMEE" instead of "HOMER" (not that "HOMER" would have been any more correct), or individuals appearing twice under different names. Sometimes the relationships are nonsensical, with characters suddenly related through improbable lines of descent. It is remarkable, though, that the models apparently get the ages roughly right: the eldest characters are at the top of the family tree, the babies at the bottom. The inaccuracies probably persist because of AI's tendency to "fill in the gaps" with random connections.

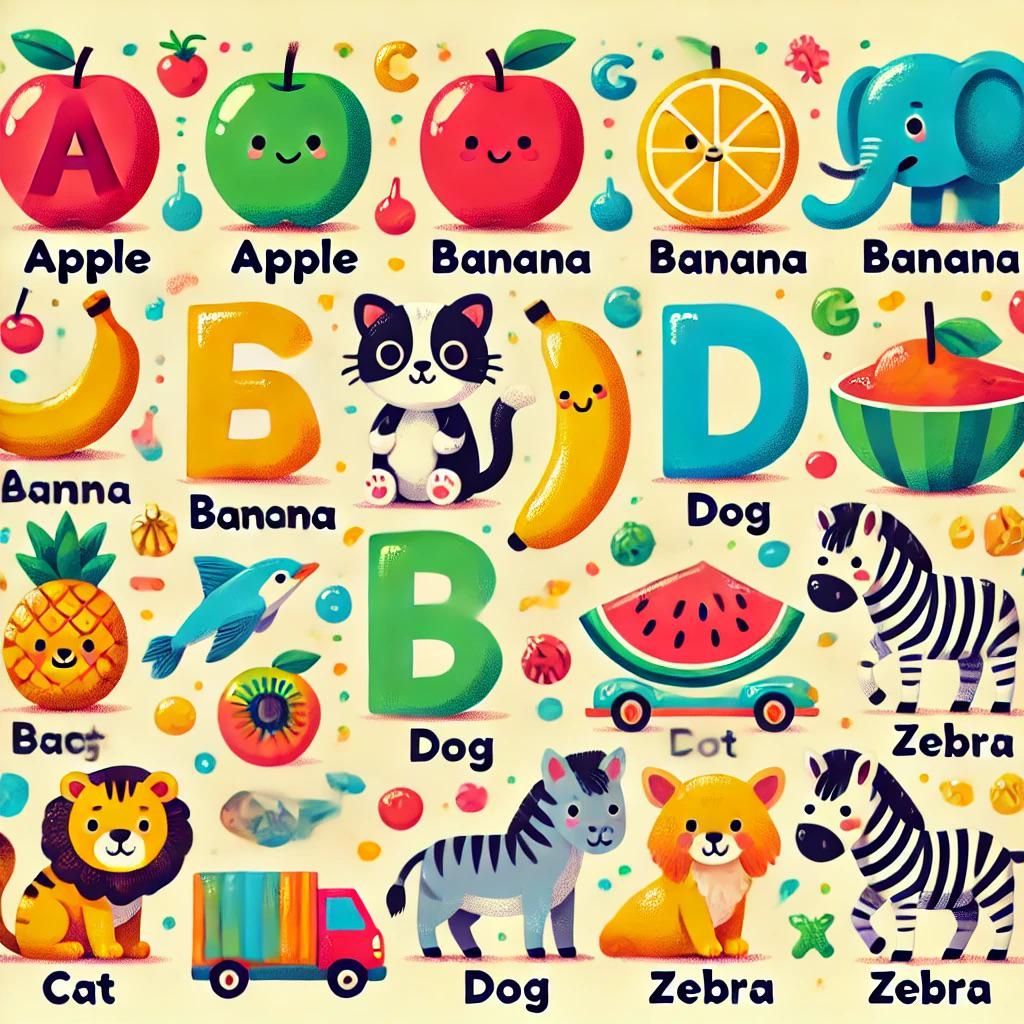

4. Alphabet Posters: From Zebra Repeats to Cyrillic Confusions

Finally, another perplexing trend has been AI-generated alphabet posters. These often feature odd elements, such as repeated zebras (illustrating the letter Z), random Cyrillic characters sprinkled throughout, or illogical pairings of letters and objects. Some collages seem closer to abstract art than to educational material.

The repetition of certain elements (like apples standing for the letter A, or zebras standing for the letter Z) may be a result of high-frequency patterns in training data, while odd letter-object pairings reflect a lack of semantic understanding.
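The three failure modes described here (repeated letters, stray non-Latin characters, and words that don't match their letter) are easy to check mechanically once a poster has been transcribed by hand. The sketch below is a hypothetical illustration in Python: the function `check_alphabet_poster` and the sample entries are made up for this post, and it assumes an English poster with one uppercase Latin letter per tile.

```python
import string
from collections import Counter

# Assumption: a valid poster uses only the 26 uppercase Latin letters.
LATIN_LETTERS = set(string.ascii_uppercase)


def check_alphabet_poster(entries):
    """Return a list of problems found in (letter, word) pairs read off a poster."""
    problems = []
    counts = Counter(letter for letter, _ in entries)

    # Flag stray characters (e.g. Cyrillic) and letters that appear more than once.
    for letter, n in counts.items():
        if letter not in LATIN_LETTERS:
            problems.append(f"unexpected character: {letter!r}")
        elif n > 1:
            problems.append(f"letter {letter} appears {n} times")

    # Flag words that do not start with the letter they are supposed to illustrate.
    for letter, word in entries:
        if letter in LATIN_LETTERS and not word.upper().startswith(letter):
            problems.append(f"{word!r} does not start with {letter}")

    return problems


# Hypothetical transcription of a flawed poster.
sample = [("A", "apple"), ("Z", "zebra"), ("Z", "zebra"), ("Я", "yak"), ("B", "cat")]
for problem in check_alphabet_poster(sample):
    print(problem)
```

A check like this only catches the labeling mistakes; it says nothing about whether the drawing next to "zebra" actually looks like one.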

In Conclusion

Summer 2025 has been a revealing season for AI "fails". They illustrate that despite rapid progress, AI systems still grapple with common-sense reasoning, factual accuracy, and logical consistency. The recurring nature of these glitches suggests that many of them stem from fundamental limitations in the training data and the underlying model architectures.

AI remains a powerful but imperfect tool. It always requires human oversight and, occasionally, a good sense of humor. And let's not forget that sometimes, the funniest mistakes are the ones we learn the most from.